We all want to look marvelous.

And in virtual worlds, we want our avatars to look marvelous.

In both form and motion, we want them to possess beauty and grace. We want people to gasp at their originality and for virtual heads to turn when we walk into a virtual room.

And we want them to look as lifelike as possible.

But there’s a catch. As our avatars march forward into a bright future of ever-increasing realism, we’re going to face a major obstacle.

The Uncanny Valley describes a phenomenon involving changes in our emotional response to simulated humans as the simulations become more realistic. When it was first described back in the 1970s, it was applied to robotics. But over time, people noticed that it also applied to our emotional response to computer generated models of humans. Such as avatars.

It goes like this. As the realism of a simulated human increases, we tend to have an increasingly positive emotional response to it. But we eventually reach a point at which the simulated human looks “very close but not quite right.” And this causes a dramatic negative emotional response. We immediately feel revulsion.

Remember the movie Polar Express? That’s a perfect example of the Uncanny Valley. The characters are pretty realistic but seem a bit “off,” and we tend to feel creeped out by them in a very visceral way. Watch this clip and you’ll see what I mean. And I bet you can remember other movies involving computer animated humans that evoke a similar feeling.

Fortunately, there’s a bright side. Continue to dial up the realism, and we eventually pull out of the nosedive of negative emotional response. We start to feel good again about the simulated human we are observing.

This graph illustrates the effect.

A great deal has been written about the Uncanny Valley. There’s lots of discussion about it in video games, as well as research on its theoretical basis and the cognitive mechanism underlying the phenomenon. But for this discussion, let’s explore a couple of broad solutions.

The obvious solution to the Uncanny Valley is to continue increasing the realism of simulated human beings.

Computing technology continues to increase in power. The graphics processing and rendering capabilities of the machines sitting on our desks make it easier and easier to create lifelike simulations of human beings. Inevitably, we’ll reach a point where we simply cannot tell the difference between simulated humans and the real thing. You can already see this starting to happen in movies where CG actors are used as stunt doubles, executing action sequences that would be impossible for a real human.

But there’s another path through the Uncanny Valley. And it involves thinking deeply about what it really means to “look marvelous” to another human being.

The non-obvious solution to the Uncanny Valley is to make simulated human beings more accurately reflect how we see ourselves.

Scott McCloud is a brilliant cartoonist and theorist on the medium of comics. One of my favorite books of all time is his Understanding Comics, an amazing exploration of the vocabulary of comics and its use as a communications medium. It’s also an essential resource for anyone interested in designing graphical user interfaces. The fact that the book is disguised as a fun-to-read comic book makes it even better.

But while we’re busily noticing this “Otherly” quality in the person we’re conversing with, we are constantly maintaining an image of our *own* face in our *own* mind.

And this imagining of our own face is always much more abstract than the face of the person we are talking to. It’s cartoonish. When you imagine yourself smiling, you imagine a cartoonish version of yourself smiling without the nitty gritty details of your own face in reality.

McCloud argues it is for this reason that the simplicity and abstractness of cartoon faces make them so appealing. We deeply empathize with them because they map directly to our imagining of our own face. Which explains why some of the most loved cartoon characters in the world are the simplest of all. We don’t see them as “Otherly.” We see them as a mirror of ourselves.

Success is usually found in happy mediums.

As I mentioned in a previous blog post, being human is what happens when human minds touch other minds. And building empathic understanding between avatars is a critical part of making those connections (which I discussed in yet another post).

So perhaps the way to best solve the Uncanny Valley in virtual worlds is to find a happy medium between increased realism and thoughtful abstractness for avatars.

Anime and manga artists figured this out long ago. If you look closely at anime and manga artwork, you’ll notice how the faces and body language of characters frequently shift between realism and crazy abstractedness.

Imagine applying a similar technique to avatars in virtual worlds.

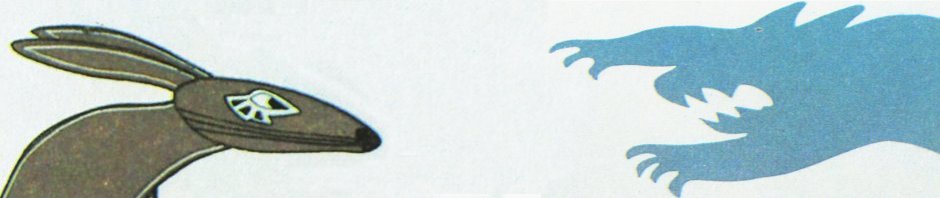

And this could work wonders for people using non-human avatars, since it is almost impossible for a truly realistic animal face to convey subtle human emotions. They simply lack the facial anatomy.

For example, the rabbit avatar you are communicating with might have a very lifelike and realistic appearance. But when Mr. Rabbit wants to convey a complex facial expression like distrust or skepticism, his face could subtly morph into an abstracted cartoonish expression that instantly evokes a sense of understanding and empathy in the observer. Clever designers of “furry” avatars in Second Life already use some of these techniques, and they are a great group of pioneers to watch for ideas.

We all want to look good. And a key part of looking good is to make it easy for other people to empathize with us. The development of avatars in virtual worlds gives us a fascinating opportunity to explore not only how we look, but how we can cultivate other people’s reflections of themselves.

This gives new meaning to Billy Crystal’s memorable quote:

“When I look into your eyes, darling, I see the reflection of me. Look at me dancing around in there. I look marvelous! Absolutely marvelous!”

-John “Pathfinder” Lester

I really enjoyed your post! Scott McCloud’s books were my initial comic-creation teachers.

Another related factor is what Marshall McLuhan called “hot” and “cold” media. In a nutshell, the more detail there is in a form of media, the less we are immersed within it, because immersion comes when we “fill in the blanks” with our own mental models and imagination.

Thanks Botgirl. Good point about McLuhan’s work. I always find it amazing how applicable his work is to new media, given that he wrote most of it back in the 1960’s.

Really enjoying your blog posts, thanks for sharing your insights!

Back in 2004 I read a very insightful blog post by a pro animator, Ward Jenkins, about just why The Polar Express is so unsettling and how simple it would be to have a human animator go through the film and add life back to the ‘dead’ mo-capped faces. That post can be found here: http://wardomatic.blogspot.com/2004/12/polar-express-virtual-train-wreck_18.html

It’s led me to believe that the ‘uncanny valley’ is realistic looking humans without realistic facial expressions, given Polar Express is so often cited as the prime example of this and suffers so much from static eyes on faces with motion capped mouths.

What virtual worlds really need is something that parses emotion and intention in our communications well enough to reflect this in our avatars’ faces and body language (just as you pointed out in your post on Snowcrash). I’ve found a simple set of gesture animations tied to emoticons are enough to go a long way towards this, even if it’s just every 🙂 😦 :O or 😛 resulting in an appropriate facial expression.

I wonder if anyone out there’s already come up with a good set of face and body language animation gestures tied to emoticons and common phrases designed to tie our avatars more closely to our intentions…
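As a rough illustration of the emoticon idea above, here is a minimal Python sketch that scans a chat message for emoticons and returns the names of the facial expressions they would trigger. The mapping, the expression names and the `expressions_for` function are all hypothetical; nothing here is part of Second Life or any real avatar system, and actually playing an animation on an avatar is left out entirely.

```python
import re
from typing import List

# Hypothetical mapping from text emoticons to expression names.
# A real virtual world would have its own animation identifiers.
EMOTICON_TO_EXPRESSION = {
    ":)": "smile",
    ":(": "frown",
    ":O": "surprise",
    ":P": "tongue_out",
}

# One regular expression that matches any emoticon in the table.
_EMOTICON_PATTERN = re.compile(
    "|".join(re.escape(emoticon) for emoticon in EMOTICON_TO_EXPRESSION)
)


def expressions_for(message: str) -> List[str]:
    """Return the expression names triggered by a chat message, in order."""
    return [
        EMOTICON_TO_EXPRESSION[match.group(0)]
        for match in _EMOTICON_PATTERN.finditer(message)
    ]


if __name__ == "__main__":
    print(expressions_for("Great to see you :) wait, what :O"))
```

Common phrases could be handled the same way by adding more entries to the table, though plain text matching like this will obviously miss sarcasm and context.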

I’m happy you are enjoying my posts, and thank you so much for pointing out that excellent piece by Ward Jenkins. I had never seen it before. It’s amazing how much a talented animator artist can breathe life into something. Very enlightening to read about his tricks of the trade.

I’ve seen sets of face/body language gesture animations for avatars in Second Life, but nothing very comprehensive. Plus, the current avatar mesh in Second Life doesn’t really support complex facial expressions. And then there are the usual emotes you find in various MMOs, but those are usually pretty primitive in my experience.

What I’d love to see is a sophisticated tool like Valve’s Faceposer that could be adapted to a virtual world for driving cued avatar face/body language changes in real-time. That would allow you to create an amazing expressive range.

I never really considered the phenomenon this deeply before, but it does bear out. Then again, I’m an anthropomorphic llama and people tell me I’m handsome all the time – go figure that one out, I sure can’t. 🙂

Great post, Pathfinder – I always find this topic fascinating. I like Nat’s idea about it being related more to the animation/movement on the avatar/robot’s face rather than precise reproduction of pores and moles, and it seems to me this fits well with the ideas that you bring in from McCloud about the abstracted, simplified human face we construct in our minds. That is, perhaps what allows cartoon characters to become so endearing and “human” is not just the abstraction, but the way that lets us focus on the portrayal of affect: either a static image of a well-captured expression of surprise on Dagwood’s face, or the essentialized version of sorrow moving across the face of a toy in Toy Story. Affect being so closely related to physical embodiment, which is happening in space *and* in time. I’ve been more captivated by a fleeting, minimal smile crossing an avatar’s face than by more complex expressions that just didn’t happen the right way. So maybe the work to be done with affect in SL is not just the mesh itself, but the subtle programming that goes into timing. Dunno.

A couple of not-so-recent clips that nearly cross the valley, for me:

Meet Emily: Her face is no less immobile than any other botox user, but the transitions and “attack/decay” on the emotions feel real.

This Wonderful Life: A more cartoonish face, but I feel myself pulled into an empathic connection with a subjectivity that feels real to me (the baby, on the other hand, never quite works).

Thanks for the additional thoughts. Meet Emily is simply amazing. It’s incredible how sensitive we are to those attack/decay transitions. Important in leveraging our uncanny human ability to sense and interpret things like microexpressions, too.

I strongly feel the future involves us blending the right mix of realism, abstraction and timing. And along the way, we’ll be defining a new language of nonverbal social cues for human beings. That’s a project I’d love to dive into. 🙂
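To make the attack/decay idea a little more concrete, here is a minimal Python sketch of a timing envelope for a single expression: the expression ramps in, holds, then fades out instead of snapping on and off. The function name `expression_weight` and the default timings are assumptions chosen purely for illustration, not values taken from Meet Emily or from any animation system.

```python
def expression_weight(t: float, attack: float = 0.2,
                      hold: float = 1.0, decay: float = 0.6) -> float:
    """Blend weight in [0, 1] for an expression triggered at t = 0 seconds.

    attack: seconds to ramp from neutral to the full expression (must be > 0)
    hold:   seconds the full expression is held
    decay:  seconds to fade back to neutral (must be > 0)
    """
    if t < 0:
        return 0.0                      # not triggered yet
    if t < attack:
        return t / attack               # linear ramp in
    if t < attack + hold:
        return 1.0                      # fully expressed
    if t < attack + hold + decay:
        return 1.0 - (t - attack - hold) / decay  # linear fade out
    return 0.0                          # back to neutral


if __name__ == "__main__":
    for t in (0.0, 0.1, 0.5, 1.5, 2.0):
        print(t, round(expression_weight(t), 3))
```

A linear ramp is the simplest possible choice; an eased curve would likely feel more natural, and tuning those few timing values is probably where most of the "feels real" work would go.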

Hi Pathfinder,

Loved your post, the links within it and the other posted comments. Reading your post today made me think about a number of episodes of ‘Designing Worlds’ I watched recently on Treet.tv (a program I love watching because of the intelligent and articulate compering done by the two hosts and commentary by their guests).

The first thing that struck me about the avatars of both the hosts and guests was the amount of detail that has been put into customizing them. While not necessarily making them more ‘lifelike’, it certainly does convey a strong sense of individual personality.

The next thing that struck me was the rather static nature of the avatars as they asked or answered questions. There is almost no movement apart from the mouths as they speak (and the eyes, which move automatically). Even then, in some cases the mouth animations didn’t work, which in closeup shots made the avatars seem little more than a static picture and felt ‘unnatural’ when you could hear the voice supposedly coming from that avatar. However, when the mouth animations were working, you did have a feeling of the avatars being more ‘lifelike’ and of there being a live person behind them. Lip syncing is pretty well established and standard in SL now, and certainly goes a long way to making the avatars more lifelike without being overly complex.

The next thing that struck me about how lifelike or otherwise the program’s hosts or guests were is the lack of any expressive body movement. In some cases the furniture the avatars were sitting on had inbuilt animations, which do make the avatars move, but which I personally find very annoying and distracting. It is movement, but it is also a relinquishing of control over the limited movement avatars already have in SL (such as the head tracking cursor movements) and very de-personalizing. I will usually not sit on furniture with inbuilt animations in it for this reason (it makes me less rather than more expressive).

I also noticed that neither the hosts nor the guests use the speech animations provided by SL. This may be for technical reasons (e.g. it causes lag when the program is being filmed), or personal preference. I guess not everyone likes the animations provided, but they at least do make avatars more animated and interesting when using voice. What I would love to see is a wider range of standard speech animations being developed that reflect the kinds of body language that many of us use in everyday life. Even some culturally based movements would be good (e.g. certain cultures, like the Italians, are well known for being very expressive with their hands) in terms of adding to the rich tapestry of possible body language for avatars. This might be far simpler to implement than facial expressions, which I imagine are more complex. Interestingly, I have found that my students rarely ever look at the facial expressions of other avatars, which requires some skill to do in terms of zooming in on the face and which is something they are not really interested in doing.

Even facial expressions don’t necessarily need to be that complex. The simple ability for my avatar to smile when I smile, frown when I frown and even maybe wink when I wink would go a long way to making our avatars more expressive. There are webcam software programs out there that have tried to capture a limited range of facial feature movement of the user, but so far these programs don’t seem to really work that well. There was one software program (unfortunately I don’t recall the precise name) that enabled you to control the angle of the view of the avatar by movement of your head which is captured by a special webcam, but when I asked it only worked with certain computer games, not with SL. Even being able to make your avatar nod in agreement or shake its head in disagreement without having to use the keyboard would be a great thing.

I think for me what I want more than avatars being more ‘lifelike’ is them being more ‘expressive’. This would include some facial expression, but I think for me more importantly it would include more nuanced and varied body language which can be seen even without the need to zoom. The simplest way to implement this initially might be through additional inbuilt speech animations. Eventually I would love to see some kind of haptic interface where movement of my body parts (my hands, arms, head) would trigger similar movements in my avatar. This would give our avatars a much greater degree of expressiveness, without necessarily making them more ‘lifelike’.

One final comment. Going back to the hosts and guests on ‘Designing Worlds’. What really makes them feel ‘lifelike’ to me at the moment given the limitations I described above is the very fact that the people behind the avatars are incredibly articulate and intelligent. More than anything this is what ‘brings them alive’ for me.

Sorry for the long post.

Cheers,

Scott

Really interesting post, Pathfinder. So glad to have found your blog site. As I was reading the opening paragraphs, I thought to myself – “yeah, just like in The Polar Express..” and then you linked to it. Also appreciated Botgirl’s reminder of McLuhan’s work and how much more immersive the experience is when we are asked to fill in the blanks. I’ve been doing a lot of work with new Second Life avatars in the past few months and I’ve noticed a predictable pattern with them….in the first session, they consistently want to make their avatar look more like them. I hear things like “I’d like her to have blonde hair….” or “How can I make his skin darker?” ….or “This outfit is not something I would wear….” And then, once they settle into a comfortable look, they stop investigating their appearance. If I press to urge further avatar modification or make suggestions along that line, I typically get waved off. After reading your post, I’m thinking that maybe they’ve gotten to the point on the curve where the connection is close enough for comfort but not so close as to teeter on the edge of the uncanny valley.

Interesting observation!

Watched the clip. No visceral response. No sense that any of the characters felt ‘wrong’. Obvious that they’re artificial but no negative response to that.

But then, I’m a bit odd.

I thought the same, Tateru.

I also believe that some people (like those on the Autistic Spectrum) who do not rely as much on Body Language actually prefer less reality on this issue and don’t seem to notice the valley.

Wow, Pim, fascinating observation. I have only met a few avis in the Autistic Liberation Front and related builds but I don’t know if that topic has come up. I know very little about autism but I can see how dynamically realistic avatars would be a buzzkill if you’re already operating at sensory overload. Daft & Lengel’s “media richness” theory (q.v.) might have some application here.

I mostly rely on textual descriptions of expressions and gestures (not smilies/emoticons), which I find mostly misleading, and I frequently provide the same.

Avatar facial expressions, lip-synch, animations and emotions are all issues I think of constantly in filming – How to maximize what looks great about us, and minimize the lack of real emotive gestures, looks – How to get a turned head to convey meaning, a sideways glance to indicate interest, how to get a subtle read on “what an avatar is thinking” yes, challenging………

I have often thought the basic raising of eyebrows, angled above our eyes in so many cartoons, their vectors signaling surprise, annoyance, interest, would be fantastic.

I would love to have more eyebrow control (I know we can manage some small changes, but it would be great to just arch one eyebrow. Eh?)

Cartoon gestures are a somewhat limited vocabulary, but they get the job done. I would love to see more emotion on an avatar’s face; for film making it would be fantastic.

We do, however, have that ability to project onto the other in cartoon form. “South Park” is hugely entertaining, and its characters are cardboard cutouts, certainly not next-generation animation. The somewhat limited facial vocabulary works in a variety of ways: there are cues which we instinctively respond to, and it is the value of the writing, plot and characterization which makes it so watchable.

Just as it is the value of our human interactions, or the enjoyment of watching Elrik & Saffia on Designing Worlds or the vast arena of human emotion from which we project and infer connection that also makes virtual worlds so important to us.

Is what we infer from emoticons and text, because of how they look, or because of how we read them? How we allow them to make us feel?

I just remembered this comic I created on the topic in May of 2009. The concluding line was: “You can not look into another being’s soul through the eyes of their avatar.”

Good point, Botgirl (and nice comic). I do agree that we can see microexpressions and rich nonverbals in RL, but I don’t know if the research supports their authenticity as evidence of what’s going on inside. Sometimes yes, sometimes no; not just when we are trying to hide things but also through a variety of incorrect stereotypes or myths about what various nonverbals mean. I think the accurate reader of nonverbals relies a bit on a history of interaction and a depth of knowledge of the other; that says to me that in SL I might be able to learn to “read” quite a bit from things my friend’s avatar is doing (though the bandwidth is narrow). This gets back to the notion that diachronic behavior rather than physical semblance generates that sense of reality.

My second thought is that your statement reminds me of what George W. Bush said about looking into Vladimir Putin’s eyes and seeing into his soul. I think there’s a lot that I can get from looking into someone’s eyes, but it’s unreliable.

Do you think it’s possible to hide one’s true character online?

After seeing someone change their av 30 times in front of me one night, going from one folder to another I realized that no matter what the avatar looks like, it is merely wrapping for our souls. Possibly as our outworld skins are too.

“On the Internet, everybody knows you’re a dog” (a slightly earlier version of the now more-famous quote)

It’s *possible* to do (it’s certainly no harder than doing it offline). The basic thrust, however, is that if you keep talking about bones, marrow-bone jelly and flea-collars, people are eventually going to conclude that you’re a dog.

Under normal circumstances, though, I find few people make an effort to obfuscate. Oh, they won’t necessarily give you their offline name and street address, but folks are generally pretty open about their circumstances, experiences and all manner of other minutiae of their lives – things they wouldn’t normally talk about offline – because the costs and risks of revealing that information are much lower, so long as they can control access to key pieces of information (eg: offline first/last name, street address, phone number, and so forth).

Having privacy isn’t about concealing or revealing information, it’s about being the one who makes the choices about whether, when and what to reveal. As long as you’re in control, you have privacy no matter how much you choose to divulge.

Usually, people who are attempting to actively hide their true character either (a) fail, or (b) if they never break character then it doesn’t actually matter. If the jerk doesn’t act like a jerk, does it make a noise? (Whups. Wrong mixed metaphor).

I knew someone who role-played a teacher at NCI in SL. It was, quite literally, a game to her. However, she never broke character during class, she prepared her class-materials carefully, she imparted real knowledge and skills to class attendees, and answered their questions. I asked her about it once, and pointed out that she said “Of course I’m no teacher! I’m just role-playing one!”

And I wondered just how different that is from what people normally do offline.

If someone’s doing the right thing, does it matter what reason they’re doing it for? Some people will tell you yes that it absolutely does, and some people will say no, and most of you will point out that I’ve wandered considerably off-topic, and thanks, Tat… but can you go get a coffee or something and stop wandering off on narrative tangents like this?

The legendary ramblings of Tat are appreciated…

I do find that people want to spend a lot of time on SL and so find ways to do good in order to make that happen. We need rewards for positive social behavior and in SL the reward is actually being there. The teacher reminds me of this kind of thing.

But to be honest – when the motivation is here to the degree we know it to be in SL where does the need for facial realism lie? In other words – What we feel we want to do is important, and we give of ourselves.

Is it as important what others see?

If we are all able to comprehend each other, valleys uncanny or otherwise might not be the most important thing. It’s the connection, it’s the new abilities we can also demonstrate, it’s the intelligent conversations or even the silly ones which are important.

(From a film making point of view or TV type entertainment – I want much more range. However, I realize that being able to finesse subtle emotional cues as they flit across the face of my friends just doesn’t mean that much, because I can sense through their words and voice how they feel. The camera, however, needs the better facial expressions.)

Hi, Neat post. There is a problem with your website in internet explorer, would check this… IE still is the market leader and a huge portion of people will miss your excellent writing due to this problem.

I’ll check on that. Thanks.
